In the ongoing, albeit somewhat irregular, theme of technical posts we once again want to bring you a deep dive into a topic that is not often touched upon. This time the focus is on applying a microservice or clustering concept to a piece of software that really does its best toddler temper tantrum impression of not wanting to do its homework.

Microservices

In the world of “web applications” the containerization and clustering of applications through various concepts, layers and confusing config files is a landscape full of wonder and pretty explosions. For most, let’s call them websites from now on, since that is what they are, these setups are not all that useful, since they mostly apply to projects of vast scale. Nonetheless some still fall into the trap of pretty buzzwords and promised gains. Supposedly that is easier than blaming oneself for the code not being optimized or the hardware being overloaded as is. Microservices in most cases describe the concept of splitting a large application into smaller parts, each handling a specific task by taking input and producing output. This goes along with clustering these parts across vast networks to position them closer to the user and scaling them as markets grow or shrink. For large platforms with thousands of users this makes the most economic sense, since the solution in the past was to simply slap the entire app onto ever-growing hardware, which did not scale all that evenly in either performance or cost. Thus the concept of distributing load and splitting things into the smallest parts to make them more efficient has helped the internet grow, and has helped certain companies and platforms make billions while slashing their IT budget.

Load Balancing

Not a new concept by any means, but an ever more important one these days. A single point of ingress for data into an application serving a wide range of potential sources means potential bottlenecks on the horizon. Equally, producing the output from that data generally results in a cascade of ever slower processing until you hit the inevitable timeouts. Balancing this load by means of microservices or caching mechanisms is common practice, and not just in the world of websites. Any type of application, down to the very browser you are reading this through, subscribes to the concept of load balancing in one way or another. At the core of the solution is spreading the load across multiple workers that do not rely on other parts to process the data. In most programming languages this is known as asynchronous processing, and it often goes hand in hand with an object-orientated programming style that keeps the individual parts self-contained. As a concept the idea is thus to allow all parts of an application to run and finish in their own time without causing the whole thing to grind to a halt, even if that, in the name of keeping the end results in sync, sometimes cannot be avoided either.
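
To make the idea of parts finishing in their own time a little more tangible, here is a minimal sketch in Python. The task names and delays are made up for illustration and have nothing to do with OpenSim's actual code; the point is only that independent pieces of work overlap and are brought back in sync at a single place.

```python
import asyncio


async def handle_request(name: str, delay: float) -> str:
    # Simulate a slow, independent piece of work (e.g. a database lookup).
    await asyncio.sleep(delay)
    return f"{name} done"


async def main() -> list[str]:
    # All three tasks run concurrently; none waits for the others to start.
    # gather() is the one point where the results are brought back in sync,
    # and it returns them in the order the tasks were passed in.
    return await asyncio.gather(
        handle_request("assets", 0.03),
        handle_request("inventory", 0.01),
        handle_request("presence", 0.02),
    )


results = asyncio.run(main())
print(results)
```

The total runtime is roughly that of the slowest task rather than the sum of all three, which is the whole appeal.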

Where does OpenSim come into this though?

This is where it gets really interesting, because OpenSim has been built from the ground up to split individual processing into parts that can run on their own. These individual services are often asynchronous as well and can even be split and distributed. This design allows for applying the concept of microservices along with the load balancing that comes with it. However, that is easier said than done. As it turns out, the interconnection between the services, needed to make sure once in a while that all that asynchronous data actually makes any sense at all, is not a straightforward affair. More so since changes and new features demand direct connections to other services that absolutely cannot wait for anything else to go on.

In the past there were attempts to resolve this by simply creating another process of OpenSim running as a sort-of backup to receive the same data and run it independently. Should the return then arrive faster than the main process, then it would be used instead. This went along with splitting services out into their own instances as well, but the resulting complexity and requirement to test each new change to not severely break the chain of data processing meant this project never really went anywhere beyond a working prototype.

That said, the attempt did emphasize the need to maintain the service-based setup of OpenSim. Thankfully this has been maintained for the less complex part, namely providing the main services that connect the assortment of simulators into a cohesive world. What is commonly referred to as Robust services generally still has the ability to be split and even run as copies of each other. This leaves the door open for applying both the concept of microservices and load balancing to it. Though as already mentioned, there are a few things that managed to become rather large pitfalls for anyone looking to attempt it.

Robust, a simpleton with an attitude

To begin let’s go over the goals and requirements.

- Split as many services contained in Robust into their own instances

- For services with a potential to overload from data ingress or processing spawn multiple instances and distribute the load between them

- Setup connections to each instance in a manner that allows for effective load balancing and reduces the complexity of setup for simulators connecting to them

To achieve these goals we can use a few methods already available, some of which require a bit of tinkering, and some external systems without which nothing would work. Let’s go over each part.

Robust

With the aforementioned splitting in mind, the basic configuration file for a single Robust instance already has a list of services it contains as well as their definitions further down below. All we thus have to do here is select the services we want to run in each instance and make sure that in the end we have instances for all of them. However, this idea gets thrown out the window rather quickly when looking at the actual service definitions. The problem sits in the connections services have with each other. While a lot of them reach their dependencies via either a local service definition or an external connector, there still exist some that flat out assume a copy of the service is running in the same instance. So the difficulty is now up a notch, as we have to find the services that have to go together to share data.

Connectors

Most connected services refer to other services via the direct connection established over the addins present as part of the Robust system. We can see these as DLL files describing each service. However, in order to allow multiple instances, or indeed other parts of the software as a whole, to communicate with each other there exist Connectors. These are also DLLs, but their setup is somewhat different in that they provide a remote-bound connection to a service defined not by the addin, but by a URL. This means we can change our service definitions to these Connectors to allow them to connect to a service running in a different instance.
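
The difference between a direct addin connection and a Connector can be pictured roughly like this. The sketch is in Python rather than the C# OpenSim is written in, and every class and function name in it is hypothetical; it only illustrates the idea of two implementations sharing one interface, with the remote one bound to a URL.

```python
from typing import Optional, Protocol


class PresenceService(Protocol):
    def describe(self) -> str: ...


class LocalPresence:
    """Stands in for a service loaded directly into the same instance."""

    def describe(self) -> str:
        return "in-process call"


class RemotePresenceConnector:
    """Stands in for a Connector: same interface, but bound to a URL."""

    def __init__(self, url: str) -> None:
        self.url = url

    def describe(self) -> str:
        # A real connector would issue an HTTP request to self.url here.
        return f"remote call to {self.url}"


def build_presence(remote_url: Optional[str]) -> PresenceService:
    # The calling service does not care which of the two it gets back.
    if remote_url:
        return RemotePresenceConnector(remote_url)
    return LocalPresence()


print(build_presence(None).describe())
print(build_presence("http://presence.grid.example").describe())
```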

Siblings

Splitting everything up into pieces is one part of resolving issues created by overloaded services, but eventually even that is no longer enough to handle the influx of data. As such, applying the idea of load balancing by way of creating copies of an application becomes a requirement. Unfortunately this presents an issue when we want to make sure the individual copies are still able to share data with other parts, whether in the form of connecting multiple services to a single dependency or the other way round. This is where we have to resort to external software to group multiple instances of the same service under a common umbrella through which we can establish connections with it.

Includes

When attempting to set up a vast array of instances, each requiring its own little changes to configuration to interconnect properly, we quickly run into less of an issue and more a case of trying not to get brain freeze in the process. Writing a full configuration for each node, requiring hundreds of lines each time to create all the necessary information for it to run, is tedious and can easily produce mistakes. Thankfully this is something that has already annoyed at least one person before, and to our advantage this person has done something about it. Configurations are capable of loading data from other files and combining them into a fully qualified instance configuration. This means we can configure each service for connecting locally and remotely and simply mix and match the required parts via the architecture includes. We can now simply select what an instance is meant to run as a local service and what it should connect to remotely.
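
The mix-and-match idea can be sketched in a few lines of Python. This is not OpenSim's own configuration loader; the fragment names, the `Mode` and `URL` keys and the values are all invented for illustration. It only shows how small fragments combine into one complete configuration per instance.

```python
import configparser

# Hypothetical fragments; in practice these would be separate files on disk,
# sorted into folders for local and remote definitions.
FRAGMENTS = {
    "local/asset.ini": "[AssetService]\nMode = local\n",
    "remote/inventory.ini": (
        "[InventoryService]\nMode = remote\n"
        "URL = http://inventory.grid.example\n"
    ),
    "instance.ini": "[Network]\nport = 8010\n",
}


def build_config(includes: list[str]) -> configparser.ConfigParser:
    parser = configparser.ConfigParser()
    # Keep key case as written; configparser lowercases keys by default.
    parser.optionxform = str
    for name in includes:
        # Fragments read later override earlier ones on conflicting keys.
        parser.read_string(FRAGMENTS[name], source=name)
    return parser


# An instance that runs assets locally and reaches inventory remotely.
asset_node = build_config(
    ["local/asset.ini", "remote/inventory.ini", "instance.ini"]
)
print(asset_node.sections())
```

Only the short list of includes differs between instances, which is what keeps the whole thing manageable.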

Hacking

This is where it gets complex. In order to reduce the load created by asking the same questions over and over again, some services rely on caches. These will cache a request for certain data, allowing it to be delivered without the need to retrieve it from data storage. Unfortunately these caches are local to the specific service instance. If we then multiply this service, there is a chance of stale cached data on one copy overriding actual data entered on a sibling. To combat this issue we have to go deep into OpenSim, find the caches and either remove them entirely or change their behavior so they are not in use when multiple instances of a service are being run. In this case the better and more compatible option is to look for each part of the code that either requests or enters data into the caches and make these actions dependent on a flag that either allows them or not, with the latter falling back to retrieving or storing data in the database directly, as if the cache had no entry for it.
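
The problem and the flag-based workaround can be reproduced with a toy model. The sketch below is Python, not OpenSim code, and the `UserStore` class and its `use_cache` flag are hypothetical stand-ins for a cached service and the switch described above.

```python
from typing import Optional


class UserStore:
    """Hypothetical service with a per-instance cache in front of a database."""

    def __init__(self, database: dict, use_cache: bool) -> None:
        self.database = database      # shared between all siblings
        self.use_cache = use_cache    # set to False when siblings are running
        self._cache: dict = {}        # local to this one instance

    def get(self, key: str) -> Optional[str]:
        if self.use_cache and key in self._cache:
            return self._cache[key]
        # Flag off or cache miss: go straight to the database.
        value = self.database.get(key)
        if self.use_cache and value is not None:
            self._cache[key] = value
        return value

    def set(self, key: str, value: str) -> None:
        self.database[key] = value
        if self.use_cache:
            self._cache[key] = value


db = {"name": "old"}
cached_a, cached_b = UserStore(db, True), UserStore(db, True)
cached_a.get("name")                 # sibling A caches "old"
cached_b.set("name", "new")          # sibling B updates the database
stale = cached_a.get("name")         # A still answers from its own cache

uncached_a = UserStore(db, False)
fresh = uncached_a.get("name")       # with the flag off A reads the database
print(stale, fresh)
```

The price of the flag is of course that every request now hits the database, which is why it should only be switched off where siblings actually exist.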

Long Term

As changes to main parts of OpenSim are still being made in order to update some of the ancient standards used when it was originally conceived along with new features requiring additional code the long term stability of this is still in question. Changes already made to some parts do already cause some instability and require long term testing as well as further changes to mitigate. As such this setup will likely require further “hacking” and even changes to the setup itself to account for changing service relations. As of yet it is unclear whether changes to the service interrelations to retain or even enhance the ability to split each service will be made, but we certainly hope so. Increasing data sizes and ever more growth will test the infrastructure and the more a setup can be spread and load distributed among the parts the more solid it will be in the future. As with everything it requires testing and more testing and ever more testing to identify issues, but as OpenSim is still in development that is frankly a given constant already.

The gritty bits

Having completed the crash course in Robust setup let’s create a hypothetical situation realistic enough to warrant creating a solution for.

Say we have to deal with over 10000 users logging in throughout the day, each having thousands of items in their inventory and being an overly active member of the community, chatting and roaming the world with vigor. How do we handle the influx of hundreds of requests per second?

Let’s go over each part.

1. Nginx

Nginx is a webserver with load balancing capabilities through the use of a proxy setup. This sounds complicated, but is actually relatively easy. What we need to do is set up a hostname for each individual type of service we want to run instances of. Then we pass requests arriving for these hostnames on to a set of instances via the ports used by those instances. This takes the form of server definitions with a proxy pass to an upstream group listing the ports used by the instances.

An example:
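
A minimal sketch of what such a definition might look like, generated by a small Python helper so the same pattern can be repeated for every service. The hostname, the backend ports and the central port 8002 are assumptions picked for illustration, and the helper function itself is hypothetical; the `upstream`, `server`, `listen`, `server_name` and `proxy_pass` directives it emits are standard Nginx ones.

```python
def render_nginx_service(hostname: str, ports: list[int], listen: int = 80) -> str:
    # Nginx upstream names are free-form; derive one from the hostname.
    upstream = hostname.replace(".", "_")
    servers = "\n".join(f"    server 127.0.0.1:{port};" for port in ports)
    return (
        f"upstream {upstream} {{\n"
        f"{servers}\n"
        f"}}\n"
        f"\n"
        f"server {{\n"
        f"    listen {listen};\n"
        f"    server_name {hostname};\n"
        f"\n"
        f"    location / {{\n"
        f"        proxy_pass http://{upstream};\n"
        f"    }}\n"
        f"}}\n"
    )


# Three hypothetical inventory instances behind one hostname and one port.
config = render_nginx_service("inventory.grid.example", [8010, 8011, 8012], listen=8002)
print(config)
```

Running this prints one upstream group with three backend servers and one server block that proxies everything arriving for the hostname to that group.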

We can do this for all instances, multiple or singular, passing everything over a central port, thus making configuration of simulator connections relatively easy. Nginx handles routing the requests in a somewhat round robin style. This means it is not directly aware of the load placed on each copy, but we are changing the receiver for each request onto a different copy, which is likely enough. If necessary we can always add more copies.
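
What round robin distribution means in practice can be shown with a few lines of Python. This is a simulation of the principle with made-up ports, not of Nginx internals: each request simply goes to the next copy in line, regardless of how busy that copy is.

```python
from collections import Counter
from itertools import cycle

# Three hypothetical copies of the same service, identified by port.
backends = cycle([8010, 8011, 8012])

# Hand nine incoming requests to the copies in turn.
assignments = [next(backends) for _ in range(9)]
per_backend = Counter(assignments)

print(assignments)
print(per_backend)
```

Every copy ends up with the same number of requests, which evens out well as long as individual requests cost roughly the same.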

2. Robust

In order to make it easier to run a large number of copies, instead of multiplying the binary as a whole we simply treat it as a template to spawn copies from. This requires providing each instance with the information of where its configuration should be loaded from. We do this by adding the inifile parameter to the execution command, pointing it at a single file containing the aforementioned definitions and includes.

An example:
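
A rough sketch of how the start command for each copy could be assembled, written as a small Python helper. The directory layout and file names are hypothetical, and running the binary through `mono` is an assumption that depends on the OpenSim version and platform; only the `inifile` parameter comes from the setup described here.

```python
from pathlib import PurePosixPath


def robust_command(template_dir: PurePosixPath, ini_file: PurePosixPath) -> list[str]:
    # One shared binary directory, one ini file per instance.
    return ["mono", str(template_dir / "Robust.exe"), f"-inifile={ini_file}"]


template = PurePosixPath("/opt/robust-template")
commands = {
    name: robust_command(template, PurePosixPath(f"/etc/grid/{name}.ini"))
    for name in ("inventory-1", "inventory-2", "assets-1")
}

for name, command in commands.items():
    print(name, " ".join(command))
```

Upgrading then means replacing the template directory once rather than touching every copy.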

We configure each service as normal, making sure to use the Connectors for the remote counterparts. As mentioned above this structure looks confusing at first, but is actually a lot less work, as we simply combine what we need rather than writing the config sections out in each file. We organize the local connectors for services included in the specific Robust instance and the remote ones for connecting to other Robust instances into separate folders to make it easier to see what’s what.

3. Simulators

Connecting a simulator to this setup is remarkably easy given the complexity of what it is connected to. For the most part we can use the hostnames to connect the simulator services to their Robust providers. Only select services, GridInfo in particular, require a more direct connection. This also goes for external asset servers, which we hope will become more common, as assets are the second biggest bottleneck in OpenSim.

A rough example of the setup:
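
As a sketch, the simulator side could look something like the output of the following Python helper. The section and key names follow the usual grid configuration of a simulator as far as we are aware, but the selection of services, the hostnames, the domain and the central port are assumptions for illustration and need to be matched to the actual split.

```python
# Section name -> (URI key, hypothetical hostname prefix for that service).
SERVICES = {
    "AssetService": ("AssetServerURI", "assets"),
    "InventoryService": ("InventoryServerURI", "inventory"),
    "GridService": ("GridServerURI", "grid"),
    "PresenceService": ("PresenceServerURI", "presence"),
    "UserAccountService": ("UserAccountServerURI", "accounts"),
}


def simulator_config(domain: str, port: int) -> str:
    lines = []
    for section, (key, host) in SERVICES.items():
        # Every service uses the same port; only the hostname differs.
        lines.append(f"[{section}]")
        lines.append(f'    {key} = "http://{host}.{domain}:{port}"')
        lines.append("")
    return "\n".join(lines)


text = simulator_config("grid.example", 8002)
print(text)
```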

Configuration depends on how you set things up and what type of service and instance split is done. As mentioned we don’t need to worry about setting up specific ports for each service as the individual parts are proxied through to their respective endpoints already, which also handles balancing load. The identification is no longer the port, but the hostname itself.

4. Runtime Environment

This section is somewhat optional, but may be of value in the future. A big issue with setting up so many individual services is handling them when restarts and changes are required. As we are dealing with a program that runs independently we can simply push it to the background and nuke it whenever a restart is desired, but this might incur data loss. A better solution is providing a separate runtime environment for each instance. Under Windows this can easily be accomplished by simply stuffing the window into a corner and forgetting about it, but Windows is not a recommended platform to run services such as OpenSim, and on Linux this is a bit more difficult. It is possible to simply send the process to the background as mentioned, but then there is no way to interact with it or get it back other than sending data to it, which gives us no feedback. The better option is to use one of the runtime environments that are plentiful on Linux, such as Docker or LXC for containers, or simpler tools like “screen”. The latter provides “windows” we can select at will to interact with each instance, both to send commands and to watch the process working. Which one of these works best depends on familiarity and what level of separation you want for each service.
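
For the “screen” route, a sketch of the commands involved, again assembled by a small Python helper. The session name and the wrapped start command are hypothetical; `-dmS` (start a named session detached) and `-r` (reattach to it) are regular screen options.

```python
def screen_start(session: str, command: list[str]) -> list[str]:
    # -dmS starts a new, named session in detached mode running the command.
    return ["screen", "-dmS", session, *command]


def screen_attach(session: str) -> list[str]:
    # -r reattaches to the detached session so its console can be used.
    return ["screen", "-r", session]


start = screen_start(
    "inventory-1",
    ["mono", "Robust.exe", "-inifile=/etc/grid/inventory-1.ini"],
)
attach = screen_attach("inventory-1")

print(" ".join(start))
print(" ".join(attach))
```

Either list can be handed to `subprocess.run` or typed into a shell as is; detaching again is done from within screen with its usual key binding.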

The Grand Solution

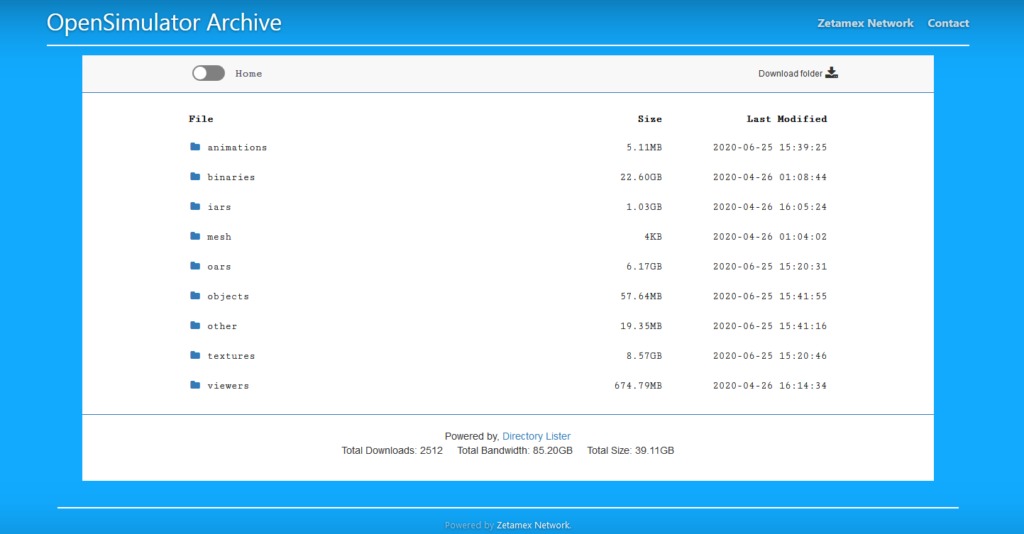

To test this we have created an environment with the following characteristics:

- Routing everything through Nginx, as previously mentioned, to reduce the complexity of connecting simulators

- Giving each instance only minor changes to its configuration, mainly the port to use

- Handling external and internal routing for service connections through the proxy as well, to reduce the complexity of service interconnection and take advantage of full load balancing of all requests

- Configurations based primarily on includes rather than full configuration files reducing complexity and clutter

- Spawning instances of a template binary to reduce complexity of upgrades

- Retaining simple configuration for simulators to services without the need to specify individual ports

- Splitting services logically based around encountered load and retaining services that have no ability to remotely connect to integrated services

- Minimal changes to OpenSim itself

This is obviously not the solution to all potential problems and there is no guarantee future changes won’t break a setup as complex as this. Certainly we hope for the opposite since there is only so much a single fully qualified instance of Robust can do on its own and hitting that limit is not a pretty sight.

Applying the concepts of microservices and load balancing to OpenSim may seem wrong and there are certainly many obstacles in the way of doing so, but the core of it was always part of the idea behind the service-based setup of Robust or even OpenSim as a whole. Thus these concepts can work for it as well, despite the issues that exist due to inter-service dependencies and caching setups. It most certainly has a way to go to truly embrace them, but it is already possible to observe the positive aspects. Whether it is a setup as complex and distributed as shown here or simply splitting out one or two services, the future undoubtedly lies in utilizing them.

Apart from the brief mention of this capability on the official OpenSim wiki and some snippets of configuration options that can be found on the web, this marks the first time it has been fully documented and tested. In the pursuit to fully dissect and test it we share the interest with a number of people, who have provided information, time and effort in testing as well. It shows the strong community spirit often found in open source projects and we hope to pass this on to anyone reading this article. For being instrumental in kicking this project off by providing the initial basis of configuration options and pointers to information, we want to thank Gimisa Cerise, who has been pulling apart the hidden and complex inner workings of OpenSim for a long time now. Equally we have to extend thanks to the OpenSim team for providing assistance in tracking down interconnected modules. The continuous effort put into the OpenSim project by everyone involved makes things like this possible in the first place; their ongoing support and work drives the project forward and we are happy to be a part of it and to contribute where possible.

We will certainly continue testing and pushing the boundaries of OpenSim to make sure it is prepared for the future and hope this insight into the capabilities it has will provide some positive impact on the metaverse as a whole.